When working with large datasets that are expensive to retrieve or rarely change, we can use caching to reduce the load on our database.

For this, we will use Laravel's Cache facade.

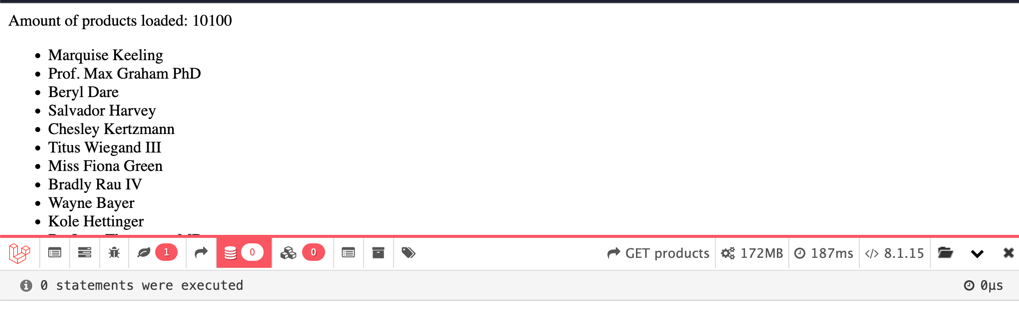

In the examples, we will optimize a database query that retrieves 10,000 records.

Caching basics

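To cache the dataset, use the Cache::remember() method. It returns the cached value if the key exists; otherwise, it runs the closure, stores the result for the given number of seconds, and returns it: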

app/Http/Controllers/ProductsController.php:

```php
use Illuminate\Support\Facades\Cache;

// ...

Cache::remember('KEY', TIME_IN_SECONDS, function () {
    return Model::get(); // or any other Eloquent query
});
```

Caching model results

For example, we will get all records from the Product model and cache them for 60 minutes.

app/Http/Controllers/ProductsController.php:

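```php
use App\Models\Product;
use Illuminate\Support\Facades\Cache;

// ...

public function index()
{
    // Cache the full product list for 60 minutes (60 * 60 seconds)
    $products = Cache::remember('products_list', 60 * 60, function () {
        return Product::get();
    });

    return view('products', compact('products'));
}
```

The first request runs the query and stores the result. For the next 60 minutes, requests make no database call, because the products are taken from the cache.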
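To make the hit/miss behaviour concrete, here is a framework-free sketch in plain PHP (8.0+). The ArrayCache class, its injectable clock, and the $loadProducts closure are hypothetical stand-ins for Laravel's cache store and the Product::get() query; this illustrates the remember() idea, not Laravel's actual implementation.

```php
<?php

// Hypothetical in-memory stand-in for a cache store, used only to
// illustrate what remember() does; this is not Laravel's implementation.
final class ArrayCache
{
    /** @var array<string, array{value: mixed, expiresAt: int}> */
    private array $items = [];

    /** @param callable(): int $clock returns the current time in seconds */
    public function __construct(private $clock)
    {
    }

    public function remember(string $key, int $ttlSeconds, callable $callback): mixed
    {
        $now = ($this->clock)();

        // Cache hit: the entry exists and has not expired yet
        if (isset($this->items[$key]) && $this->items[$key]['expiresAt'] > $now) {
            return $this->items[$key]['value'];
        }

        // Cache miss: run the expensive callback once and store its result
        $value = $callback();
        $this->items[$key] = ['value' => $value, 'expiresAt' => $now + $ttlSeconds];

        return $value;
    }
}

// A fake clock and a query counter instead of a real database
$now = 0;
$cache = new ArrayCache(function () use (&$now) { return $now; });
$queries = 0;
$loadProducts = function () use (&$queries) {
    $queries++;
    return ['Product A', 'Product B'];
};

$first = $cache->remember('products_list', 60 * 60, $loadProducts);  // miss: query runs
$second = $cache->remember('products_list', 60 * 60, $loadProducts); // hit: no query
$now = 60 * 60;                                                       // TTL has elapsed
$third = $cache->remember('products_list', 60 * 60, $loadProducts);  // miss again
```

Injecting the clock keeps the expiry testable without sleeping. The query counter ends at 2, because only the first call and the call after the TTL elapsed reach the "database".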

Caching more complex results

What if you want to cache by some condition, like by category?

Let's add a condition to our previous example: filter by category and cache those results for 60 minutes, while keeping the original list intact.

app/Http/Controllers/ProductsController.php:

```php
use App\Models\Product;
use Illuminate\Http\Request;
use Illuminate\Support\Facades\Cache;

// ...

public function index(Request $request)
{
    if ($request->has('category')) {
        // A separate cache entry for each category
        $products = Cache::remember('products_list_' . $request->input('category'), 60 * 60, function () use ($request) {
            return Product::where('category', $request->input('category'))->get();
        });
    } else {
        // The unfiltered list keeps its own key
        $products = Cache::remember('products_list', 60 * 60, function () {
            return Product::get();
        });
    }

    return view('products', compact('products'));
}
```

As you can see, our key name becomes 'products_list_' . $request->input('category').

This way, each category gets its own cache key, and we avoid overwriting our default list of products.
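The key-per-category idea can be checked without Laravel. In this plain-PHP sketch, productsCacheKey(), the simplified remember() (no expiry), and the sample $products array are all hypothetical; they only mirror the controller logic above to show that each key gets its own cache entry.

```php
<?php

// Hypothetical helper mirroring the controller logic: one cache key per
// category, plus the plain 'products_list' key when no category is given.
function productsCacheKey(?string $category): string
{
    return $category === null ? 'products_list' : 'products_list_' . $category;
}

// Simplified stand-in for Cache::remember() without expiry
function remember(array &$store, string $key, callable $callback): mixed
{
    if (!array_key_exists($key, $store)) {
        $store[$key] = $callback();
    }
    return $store[$key];
}

$products = [
    ['name' => 'Laptop', 'category' => 'electronics'],
    ['name' => 'Desk', 'category' => 'furniture'],
    ['name' => 'Phone', 'category' => 'electronics'],
];

// Plays the role of the Product query, with an optional category filter
$filter = function (?string $category) use ($products): array {
    return array_values(array_filter(
        $products,
        fn (array $p) => $category === null || $p['category'] === $category
    ));
};

$store = [];
$all = remember($store, productsCacheKey(null), fn () => $filter(null));
$electronics = remember($store, productsCacheKey('electronics'), fn () => $filter('electronics'));
```

Because the category comes straight from the request, every distinct value creates a new cache entry; one option might be to validate it against the known categories first, to keep the number of keys bounded.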

Removing cached results

To remove cached data, call the Cache::forget() method with the key you want to remove.
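Conceptually, forgetting a key just deletes the entry, so the next remember() call has to rebuild it from the database. The plain-PHP sketch below shows that sequence; ArrayCache and the $version counter are hypothetical stand-ins for the cache store and for data changing in the database, and entries never expire, to keep it short.

```php
<?php

// Hypothetical in-memory cache used only to illustrate forget();
// entries never expire here, to keep the example short.
final class ArrayCache
{
    private array $items = [];

    public function remember(string $key, callable $callback): mixed
    {
        if (!array_key_exists($key, $this->items)) {
            $this->items[$key] = $callback();
        }
        return $this->items[$key];
    }

    // Remove the entry; returns true if something was removed
    public function forget(string $key): bool
    {
        $existed = array_key_exists($key, $this->items);
        unset($this->items[$key]);
        return $existed;
    }
}

$version = 1;
$cache = new ArrayCache();
$load = function () use (&$version) { return "products v{$version}"; };

$before = $cache->remember('products_list', $load); // "products v1" is cached
$version = 2;                                        // data changes in the "database"
$stale = $cache->remember('products_list', $load);  // still "products v1"
$removed = $cache->forget('products_list');
$fresh = $cache->remember('products_list', $load);  // rebuilt: "products v2"
```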

app/Http/Controllers/ProductsController.php:

```php
use Illuminate\Support\Facades\Cache;

// ...

Cache::forget('products_list');
```

Removing cached results after retrieving the data

Sometimes you know that the data needs to be refreshed on the next request. In that case, use Cache::pull() to retrieve the stored value and remove it from the cache in one step.
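In plain PHP, the read-then-delete behaviour looks roughly like this. The pull() function and the array-backed $store are hypothetical stand-ins for the cache; the sketch mirrors what Cache::pull() does (return the value or the default, then remove the key) rather than reproducing Laravel's source.

```php
<?php

// Hypothetical sketch of pull() semantics: read the value (or a default),
// then make sure the key is gone.
function pull(array &$store, string $key, mixed $default = null): mixed
{
    if (array_key_exists($key, $store)) {
        $value = $store[$key];
        unset($store[$key]);
        return $value;
    }

    // On a miss, a closure default is evaluated, but its result is NOT stored
    return $default instanceof Closure ? $default() : $default;
}

$store = ['products_list' => ['Product A', 'Product B']];

$first = pull($store, 'products_list', fn () => ['fresh from database']);
$second = pull($store, 'products_list', fn () => ['fresh from database']);
```

Note the second call: after the first pull() removed the entry, the default closure runs, and its result is returned but not cached.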

app/Http/Controllers/ProductsController.php:

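```php
use App\Models\Product;
use Illuminate\Support\Facades\Cache;

// ...

public function index()
{
    // Returns the cached list and removes it from the cache.
    // pull() takes a key and a default; if the key is missing, the closure
    // is evaluated as the default value, but its result is NOT cached.
    $products = Cache::pull('products_list', function () {
        return Product::get();
    });

    return view('products', compact('products'));
}
```

Auto-Clear Cache on Model Changes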

Keep in mind: the cache will keep returning the stored version of your data, even after you update it in the database. So, if you want the cache to reflect changes, remove the key manually or use model events (or an observer) to do it for you.

For example, your code might look something like this:

app/Models/Product.php:

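```php
use Illuminate\Support\Facades\Cache;

// ...

protected static function booted()
{
    static::created(function () {
        Cache::forget('products_list');
    });

    static::updated(function () {
        Cache::forget('products_list');
    });

    // Without this, deleted products would stay in the cached list
    static::deleted(function () {
        Cache::forget('products_list');
    });

    // Note: per-category keys ('products_list_' . $category) are not cleared here
}
```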
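The same invalidation idea can be sketched without Laravel: every write fires an event, and a listener removes the cached list, so the next read goes back to the data source. ProductStore, its on()/fire() methods, and the array-based $cache are hypothetical stand-ins for the Eloquent model, its model events, and the cache store.

```php
<?php

// Hypothetical, framework-free stand-in for a model that fires events on writes
final class ProductStore
{
    private array $rows = [];
    private array $listeners = [];
    private int $nextId = 1;

    public function on(string $event, callable $listener): void
    {
        $this->listeners[$event][] = $listener;
    }

    private function fire(string $event): void
    {
        foreach ($this->listeners[$event] ?? [] as $listener) {
            $listener();
        }
    }

    public function create(string $name): int
    {
        $id = $this->nextId++;
        $this->rows[$id] = $name;
        $this->fire('created');
        return $id;
    }

    public function update(int $id, string $name): void
    {
        $this->rows[$id] = $name;
        $this->fire('updated');
    }

    public function delete(int $id): void
    {
        unset($this->rows[$id]);
        $this->fire('deleted');
    }

    public function all(): array
    {
        return array_values($this->rows);
    }
}

$cache = [];
$store = new ProductStore();

// Same pattern as booted(): forget the cached list on every change
foreach (['created', 'updated', 'deleted'] as $event) {
    $store->on($event, function () use (&$cache) {
        unset($cache['products_list']);
    });
}

// Cached read, like remember() without expiry
$getProducts = function () use (&$cache, $store) {
    return $cache['products_list'] ??= $store->all();
};

$id = $store->create('Laptop');
$list1 = $getProducts();              // ['Laptop'], now cached
$store->update($id, 'Gaming Laptop'); // listener clears the cache
$list2 = $getProducts();              // ['Gaming Laptop']
$store->delete($id);                  // listener clears the cache again
$list3 = $getProducts();              // []
```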

With the model observer, isn't it safe to use caching all over the place? Data is always cached, and if it gets changed or updated, the cache is cleared again. Am I missing something?

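Pretty much, yes. But still double-check everything :) For example, mass updates and deletes made through the query builder, such as Product::where(...)->update([...]), do not fire model events, so the cache would not be cleared in those cases.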