Uploading the project for the first time

To make the project work on the client's server, you need to do these things:

1. Upload the files via FTP

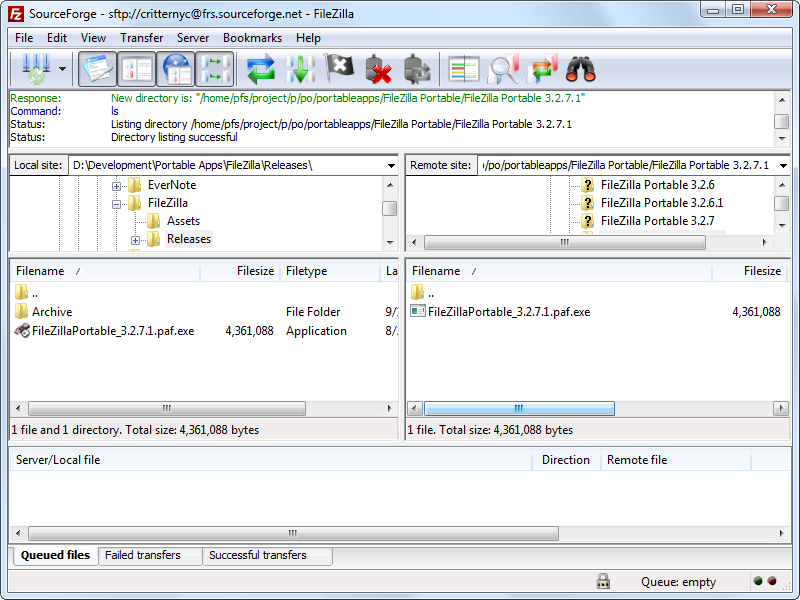

Use FileZilla or similar software.


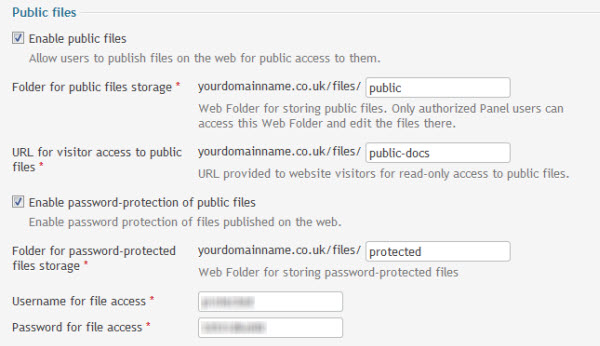
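If you'd rather script the first upload than drag folders around in FileZilla, Python's standard ftplib module can do the same job. This is a minimal sketch that rests on several assumptions. The host name, credentials and remote folder (/httpdocs) are hypothetical placeholders. It uses plain FTP (ftplib.FTP_TLS is the encrypted variant, if the host supports it). A failing mkd is treated as "folder already exists".

```python
import ftplib
import os
from pathlib import Path, PurePosixPath


def iter_upload_plan(local_root, remote_root):
    """Yield ("dir", remote_dir) and ("file", local_path, remote_path) steps.

    Each directory is yielded before the files inside it, so executing the
    steps in order never uploads into a folder that doesn't exist yet.
    """
    local_root = Path(local_root)
    for dirpath, dirnames, filenames in os.walk(local_root):
        dirnames.sort()  # deterministic, alphabetical traversal
        rel_parts = Path(dirpath).relative_to(local_root).parts
        remote_dir = PurePosixPath(remote_root, *rel_parts)
        yield ("dir", str(remote_dir))
        for name in sorted(filenames):
            yield ("file", str(Path(dirpath, name)), str(remote_dir / name))


def upload_tree(ftp, local_root, remote_root):
    """Recursively upload local_root into remote_root over a logged-in FTP session."""
    for step in iter_upload_plan(local_root, remote_root):
        if step[0] == "dir":
            # Assumption: a 5xx reply here means "already exists"; a real
            # permission problem will surface on the next STOR instead.
            try:
                ftp.mkd(step[1])
            except ftplib.error_perm:
                pass
        else:
            _, local_path, remote_path = step
            with open(local_path, "rb") as fh:
                # Binary mode, so images and fonts arrive byte-for-byte
                ftp.storbinary(f"STOR {remote_path}", fh)


# Example usage (hypothetical host, credentials and paths):
# with ftplib.FTP("ftp.example.com") as ftp:
#     ftp.login("user", "password")
#     upload_tree(ftp, "my-laravel-project", "/httpdocs")
```

Separating the plan from the upload keeps the traversal logic easy to check without a server. The FTP part is just mkd and storbinary calls in that order.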

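2. Make sure your domain points to the /public folder

How you do this depends on where the domain is managed: usually it's a control panel such as Plesk, DirectAdmin or similar software on the client's server. Laravel's entry point is public/index.php, and pointing the domain at the project root instead could expose files such as .env to visitors.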


3. Database: export/import with phpMyAdmin

Export your local database into an SQL file, then import it into the server's database as is.

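If you prefer the command line for the local export, mysqldump produces the same kind of SQL file. Below is a minimal Python sketch that builds and runs the command. The database name, user and output file in the example are hypothetical. The database is passed as a plain argument rather than with the --databases option. As a result, the dump contains no CREATE DATABASE or USE statements and imports into whichever database you select in the server's phpMyAdmin, even if its name differs from your local one.

```python
import subprocess


def mysqldump_command(database, user, result_file, host="127.0.0.1"):
    """Build a mysqldump argument list for exporting one local database.

    No --databases option: the dump then has no CREATE DATABASE / USE
    statements and can be imported into a differently named database.
    """
    return [
        "mysqldump",
        f"--host={host}",
        f"--user={user}",
        "--password",            # no value given, so mysqldump prompts for it
        "--single-transaction",  # consistent snapshot for InnoDB tables
        f"--result-file={result_file}",
        database,
    ]


def export_database(database, user, result_file):
    """Run mysqldump locally; raises CalledProcessError if it fails."""
    subprocess.run(mysqldump_command(database, user, result_file), check=True)


# Example usage (hypothetical names):
# export_database("my_project", "root", "my_project.sql")
```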

4. .env file or config/database.php

Edit one of these files so it contains the correct credentials for the server's database. Ta-daaa, your project is up and running! But that's only the first part of the story.

Maintaining the project and uploading changes

Ok, now the second phase: how do you properly upload changes without breaking anything?

1. How to upload files

This is pretty simple, right? Just upload the changed files via FTP and refresh the browser. Not so fast. What if a lot of files in different folders have changed, and we want to minimize downtime? If you just bulk-upload 100+ files, the website will behave unpredictably for several seconds or even minutes. It won't even be down. It's worse: its behavior will depend on which files happen to be uploaded at that exact moment.

Imagine this: a visitor sees the old Blade template with the old form, but by the time they submit it, the new Controller may already expect a new set of fields. So while deploying, we have to make sure the website is completely inaccessible during those critical seconds. Visitors should see "under construction", "in progress" or something similar.

If we had command-line SSH access, it would be easy: run `php artisan down`, then `git pull`, then `php artisan up`. And while the site is "down", visitors would see this:

But we don't have SSH access. So how do we "fake" artisan down command? What does it actually do?

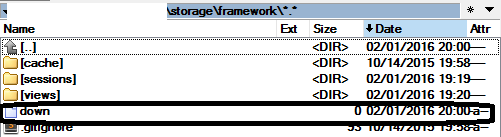

It creates an empty file called down (without extension) in storage/framework folder:

But we don't have SSH access. So how do we "fake" artisan down command? What does it actually do?

It creates an empty file called down (without extension) in storage/framework folder:

So to "fake" it, you just need to create empty file with name down, and that's it. Whenever you've uploaded all the necessary files - just delete the down file.

So to "fake" it, you just need to create empty file with name down, and that's it. Whenever you've uploaded all the necessary files - just delete the down file.

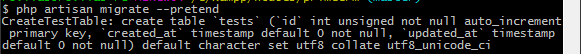
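The whole routine can also be scripted: create the down file, upload the changed files, then delete the down file. Here's a minimal Python sketch using the standard ftplib module. It is an illustration rather than a finished deploy tool, and several parts are assumptions you should adjust:

- "Changed" means a local modification time newer than your last deploy, a timestamp you supply yourself.
- The top-level .git, node_modules, storage and vendor folders are skipped. Vendor updates are covered in the Composer section.
- The local .env is never uploaded, so it can't overwrite the server's credentials.
- The down file is created empty unless you pass down_payload.

If any upload fails, the site is deliberately left in maintenance mode, so visitors never get a half-updated site.

```python
import ftplib
import io
import os
from pathlib import Path, PurePosixPath

# Paths are relative to the Laravel project root.
DOWN_FILE = "storage/framework/down"
SKIP_DIRS = {".git", "node_modules", "storage", "vendor"}  # assumption: adjust per project
SKIP_FILES = {".env"}  # never overwrite the server's own credentials


def files_changed_since(local_root, since_timestamp):
    """Return sorted relative POSIX paths of files modified after since_timestamp."""
    local_root = Path(local_root)
    changed = []
    for dirpath, dirnames, filenames in os.walk(local_root):
        if Path(dirpath) == local_root:
            # Prune skipped top-level folders so os.walk never descends into them
            dirnames[:] = [name for name in dirnames if name not in SKIP_DIRS]
        for name in filenames:
            path = Path(dirpath, name)
            rel = path.relative_to(local_root).as_posix()
            if rel not in SKIP_FILES and path.stat().st_mtime > since_timestamp:
                changed.append(rel)
    return sorted(changed)


def deploy_changes(ftp, local_root, remote_root, relative_paths, down_payload=b""):
    """Upload relative_paths while the site is in (faked) maintenance mode.

    If an upload fails, the exception propagates and the down file stays in
    place, so visitors keep seeing the maintenance page.
    """
    remote_root = PurePosixPath(remote_root)
    down_path = str(remote_root / DOWN_FILE)

    # 1. Equivalent of "php artisan down": create the down file on the server
    ftp.storbinary(f"STOR {down_path}", io.BytesIO(down_payload))

    # 2. Upload the changed files, creating missing remote folders on the way
    known_dirs = set()
    for rel in relative_paths:
        parent_parts = PurePosixPath(rel).parent.parts
        for depth in range(1, len(parent_parts) + 1):
            folder = remote_root.joinpath(*parent_parts[:depth])
            if folder not in known_dirs:
                try:
                    ftp.mkd(str(folder))
                except ftplib.error_perm:
                    pass  # assumed to mean the folder already exists
                known_dirs.add(folder)
        with open(Path(local_root, rel), "rb") as fh:
            ftp.storbinary(f"STOR {remote_root / rel}", fh)

    # 3. Equivalent of "php artisan up": delete the down file again
    ftp.delete(down_path)


# Example usage (hypothetical host, credentials, paths and timestamp):
# last_deploy = 1700000000  # Unix time of your previous deploy
# changed = files_changed_since("my-laravel-project", last_deploy)
# with ftplib.FTP("ftp.example.com") as ftp:
#     ftp.login("user", "password")
#     deploy_changes(ftp, "my-laravel-project", "/httpdocs", changed)
```

Review the list returned by files_changed_since before deploying. Modification times are only a heuristic, and a file touched by your editor will show up even if its content didn't change.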

2. How to manage database migrations

Basically, whenever you create a migration, you need to run the same SQL query on the live server with phpMyAdmin. But how do you know what that query is? All you can see is the migration code, which works "by magic". Luckily, the artisan migrate command has a helpful option: run `php artisan migrate --pretend` locally before you actually migrate, because it only covers pending migrations. Instead of running the migrations, it shows the SQL they would execute! Then you copy-paste that SQL into the SQL tab of phpMyAdmin on the server, and you're done.

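One thing `--pretend` doesn't cover is Laravel's own bookkeeping. It only prints the schema queries, so nothing gets recorded in the `migrations` table on the server. If `php artisan migrate` is ever run there later, for example after a move to a host with SSH, Laravel would try to run those migrations again.

Below is a minimal sketch that generates the matching INSERT to paste into phpMyAdmin along with the migration SQL. It assumes Laravel's default `migrations` table with `migration` and `batch` columns. For the batch number, run `SELECT MAX(batch) FROM migrations;` on the server and add one. The file name in the example is hypothetical.

```python
from pathlib import PurePath


def migrations_insert_sql(migration_files, batch):
    """Build an INSERT that records migrations as already run.

    migration_files: file names or paths such as
        "2024_01_15_120000_add_phone_to_users_table.php" (hypothetical);
        Laravel stores the name without the .php extension.
    batch: usually one more than the highest batch already in the table.
    """
    if not migration_files:
        raise ValueError("no migration files given")
    rows = []
    for file_name in migration_files:
        name = PurePath(file_name).stem
        # Migration names normally contain no quotes; escape them anyway
        name = name.replace("'", "''")
        rows.append(f"('{name}', {int(batch)})")
    return "INSERT INTO `migrations` (`migration`, `batch`) VALUES\n" + ",\n".join(rows) + ";"
```

For example, `migrations_insert_sql(["2024_01_15_120000_add_phone_to_users_table.php"], 3)` returns an INSERT with the single row `('2024_01_15_120000_add_phone_to_users_table', 3)`.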

3. Composer package updates

Ok, you need a new package, or a new version of an existing package. You've run composer install or composer update locally, and now you need to transfer the result to the server. In this case, you can simply upload the whole /vendor folder, and that's it. But if your internet connection is slow, or you don't want to upload tens of megabytes and thousands of files (yes, the vendor folder is that heavy), you need to know what actually changed there. You only need to upload and overwrite 3 things:

- Folders of packages that ACTUALLY changed (check their modified time locally);

- The whole /vendor/composer folder;

- The /vendor/autoload.php file.
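Checking modification times by hand across the vendor folders is tedious. This minimal Python sketch lists what to upload after a local composer install or composer update: every vendor/<vendor>/<package> folder containing anything modified after a cut-off time, plus vendor/composer and vendor/autoload.php. You supply the cut-off timestamp, for example the moment just before you ran Composer, and the sketch assumes Composer's standard two-level vendor layout. It checks the newest modification time anywhere inside each package folder, not just the folder's own timestamp. A folder's own modification time only changes when entries directly inside it are added, removed or renamed, not when a file deeper inside is rewritten.

```python
import os
from pathlib import Path


def newest_mtime(folder):
    """Latest modification time of folder itself or of anything inside it."""
    newest = os.stat(folder).st_mtime
    for dirpath, dirnames, filenames in os.walk(folder):
        for name in dirnames + filenames:
            # lstat: look at symlinks themselves instead of following them
            newest = max(newest, os.lstat(os.path.join(dirpath, name)).st_mtime)
    return newest


def vendor_paths_to_upload(vendor_dir, since_timestamp):
    """Return vendor paths, relative to the project root, to upload after a Composer run."""
    vendor_dir = Path(vendor_dir)
    to_upload = []
    for vendor in sorted(p for p in vendor_dir.iterdir() if p.is_dir()):
        if vendor.name == "composer":
            continue  # always uploaded in full, see below
        for package in sorted(p for p in vendor.iterdir() if p.is_dir()):
            if newest_mtime(package) > since_timestamp:
                to_upload.append(f"vendor/{vendor.name}/{package.name}")
    # Always needed: Composer regenerates the autoloader files on every run
    to_upload += ["vendor/composer", "vendor/autoload.php"]
    return to_upload
```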

Basically, that's it: these are the main tips for working on shared hosting. But my ultimate advice is to avoid it whenever possible. It's a real pain in the neck: you can easily break something or overwrite the wrong file, and those are expensive mistakes. Talk to the client about server requirements upfront, and if their argument is additional costs, your argument should be the same: look at how much more time it takes to deploy files to shared hosting. Your client would be much happier if you spent this time actually CREATING something, not deploying.