In Laravel, dispatching a Job to the Queue is simple. But what about 5,000 jobs at once? Will it crash the server? How can we optimize it? We ran this experiment, and this article shares the results.

The task is to send THIS invoice PDF to the customer.

That is, to 5,000 customers. You know, that day of the month when you need to send monthly invoices to everyone? So yeah, we tried to mimic exactly that.

Here's the plan for this tutorial:

- No Queue: will generating PDFs crash the server?

- Moving to Queue and measuring the time

- Optimization 1: Increase Queue Workers

- Optimization 2: Bump up Server Specification

- Optimization 3: Decrease Queue Workers (wait, what?)

- Conclusion (for now)

At each step, we will take measurements and draw our conclusions.

Notice: In this tutorial, the queue workers run on the same server as the web application itself. We may write a follow-up article on how to separate and scale queues across different servers.

Let's dive into the code and its (configuration) optimization!

1. Execute with No Queue

Here's our Controller for building PDFs for all users. For now, in a simple foreach loop, with no Queued Jobs:

Controller

```php
<?php

namespace App\Http\Controllers;

use App\Models\Order;
use Barryvdh\DomPDF\Facade\Pdf as PDF; // assumes the barryvdh/laravel-dompdf package
use Illuminate\Support\Benchmark;

class InstantGenerationExampleController extends Controller
{
    public function __invoke()
    {
        // Load only the columns and relations the invoice view needs
        $orders = Order::query()
            ->select(['id', 'user_id', 'order_number', 'total_amount', 'created_at'])
            ->with([
                'user:id,name,email',
                'items:id,order_id,product_id,quantity,price',
                'items.product:id,name',
            ])
            ->get();

        // Embed the logo directly in the HTML as a base64 data URI
        $imageData = base64_encode(file_get_contents(public_path('logo-sample.png')));
        $logo = 'data:image/png;base64,' . $imageData;

        // Run the whole loop 5 times, then dump the average duration
        Benchmark::dd(function () use ($logo, $orders) {
            foreach ($orders as $order) {
                $pdf = PDF::loadView('orders.invoicePDF', ['order' => $order, 'logo' => $logo]);
                $pdf->save(storage_path('app/public/' . $order->user_id . '-' . $order->id . '.pdf'));
            }
        }, 5);
    }
}
```

Notice 1: To measure performance, in all our examples, we will use the Laravel Benchmark helper with 5 iterations. This means we will run our code 5 times and get the average time it took.
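If you're curious what "the average of 5 iterations" means in practice, here is a rough plain-PHP approximation of the idea. It is not Laravel's actual `Benchmark` implementation. The `averageMilliseconds()` name is hypothetical: the function times each run with PHP's monotonic `hrtime()` clock and averages the results.

```php
<?php

/**
 * Hypothetical helper: run $callback $iterations times and return the
 * average wall-clock duration in milliseconds.
 */
function averageMilliseconds(callable $callback, int $iterations = 1): float
{
    if ($iterations < 1) {
        throw new InvalidArgumentException('Iterations must be at least 1.');
    }

    $total = 0;

    for ($i = 0; $i < $iterations; $i++) {
        $start = hrtime(true);              // nanoseconds, monotonic clock
        $callback();
        $total += hrtime(true) - $start;
    }

    return $total / $iterations / 1_000_000; // nanoseconds -> milliseconds
}

$runs = 0;
$avg = averageMilliseconds(function () use (&$runs) {
    $runs++;
    usleep(1000); // stand-in for "generate the PDFs"
}, 5);

printf("Ran %d times, average %.2f ms\n", $runs, $avg);
```

A monotonic clock is used so that system clock adjustments during a long run cannot distort the measurement.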

Notice 2: for now, we are running this on the cheap Digital Ocean server with these specs: 1 vCPU and 1GB of RAM.

Later in the tutorial, we will upgrade the server and see what happens. But, for now... What happens if we run this in sync with no queues?

THIS.

It stops with a timeout. That is expected since we are trying to generate 5,000 PDFs in one go, and it's too much for the request to handle.

We never even saw the results of Benchmark::dd(): the server ran out of RAM.

So what's the solution?

The obvious answer is the Queue, with a few workers running simultaneously. Let's try it.

2. Moving to Queue

Let's move our logic into a Queue Job using Laravel Horizon and Redis. For this, we need to modify our .env file:

.env

```
QUEUE_CONNECTION=redis
```

Here's our Controller:

Controller

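```php
<?php

namespace App\Http\Controllers;

use App\Jobs\SendOrderInvoicePDF;
use App\Models\Order;
use Illuminate\Support\Benchmark;

class QueueGenerationController extends Controller
{
    public function __invoke()
    {
        // Fetch only the IDs: each Job loads its own order data
        $orders = Order::query()
            ->pluck('id');

        // This measures dispatching only, not the PDF generation itself
        Benchmark::dd(function () use ($orders) {
            foreach ($orders as $order) {
                dispatch(new SendOrderInvoicePDF($order));
            }
        });
    }
}
```

As you can see, we no longer build the PDFs immediately. Instead, we dispatch the Job to the queue.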

Notice: if you haven't used Queues before, we have a course Queues in Laravel for Beginners and a more advanced one Practical Laravel Queues on Live Server.

Now, here are the contents of the Job class:

app/Jobs/SendOrderInvoicePDF.php

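```php
<?php

namespace App\Jobs;

use App\Models\Order;
use App\Notifications\PDFEmailNotification;
use Barryvdh\DomPDF\Facade\Pdf as PDF; // assumes the barryvdh/laravel-dompdf package
use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;

class SendOrderInvoicePDF implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    private Order $order;

    public function __construct(public int $orderID)
    {
        // The constructor runs when the Job is created, i.e. inside the
        // controller's dispatch loop, not in the queue worker
        $this->order = Order::query()
            ->select(['id', 'user_id', 'order_number', 'total_amount', 'created_at'])
            ->with([
                'user:id,name,email',
                'items:id,order_id,product_id,quantity,price',
                'items.product:id,name',
            ])
            ->findOrFail($orderID);
    }

    public function handle(): void
    {
        $imageData = base64_encode(file_get_contents(public_path('logo-sample.png')));
        $logo = 'data:image/png;base64,' . $imageData;

        $fileName = $this->order->user_id . '-' . $this->order->id . '.pdf';

        // Render the invoice and store it on the "public" disk
        $pdf = PDF::loadView('orders.invoicePDF', ['order' => $this->order, 'logo' => $logo]);
        $pdf->save($fileName, 'public');

        // Notify the customer about the generated invoice
        $this->order->user->notify(new PDFEmailNotification($fileName));
    }
}
```

We've already applied some optimizations, like eager-loading only the necessary data. Let's see how long it takes to run our benchmarks, looking separately at how long it takes to dispatch the jobs and to execute them from the queue.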

Experiment no.1: Only 1 PDF

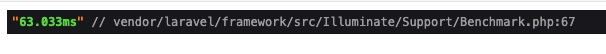

Dispatching time:

Job execution time:

Note: We run 5 iterations to get the average time.

So, if it's just one PDF, the Job is executed quickly, in 0.1-0.2s.

Let's run it with more PDFs.

Experiment no.2: 10 PDFs

Dispatch time:

Job execution time:

Hmm... Our time spent executing jobs grew quite a lot. This means a few jobs are running at the same time, putting more load on the server.

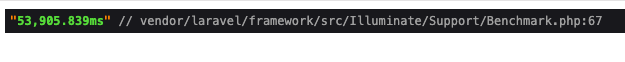

Experiment no.3: all 5,000 PDFs

So, 5,000 jobs were dispatched in ~54 seconds.

Note: To run this, we had to increase the PHP timeout from 30 seconds to a few minutes.
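We don't say exactly how the limit was raised. One common option is PHP's built-in `set_time_limit()`, which updates `max_execution_time` for the current script or request only. The 300-second value below is an arbitrary example, not the value we used. Keep in mind that a web server or process manager in front of PHP may enforce its own separate timeout, for example nginx's `fastcgi_read_timeout` or PHP-FPM's `request_terminate_timeout`.

```php
<?php

// Raise the limit for this script/request only, in seconds.
// 300 is an example value, not the one used in the experiment.
set_time_limit(300);

// set_time_limit() updates the max_execution_time setting for the current run
echo ini_get('max_execution_time'), PHP_EOL;
```

In a Laravel controller, this call would go at the top of `__invoke()`, before the dispatch loop.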

So yeah, first conclusion: dispatching a large number of jobs is time-consuming in itself, before any of them are executed.
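One likely contributor to that dispatch time, which we did not measure separately, is that `SendOrderInvoicePDF` runs its `Order` query inside `__construct()`. The constructor executes in the controller's `foreach`, not in the worker. The plain-PHP sketch below contains no Laravel code. `FakeDatabase`, `EagerJob`, `LazyJob` and `dispatchAll()` are hypothetical stand-ins that only count *where* the queries happen. As far as we understand `SerializesModels`, the worker re-fetches the model when it unserializes the Job anyway, so the constructor query is probably redundant work done at dispatch time.

```php
<?php

// Hypothetical stand-in for the database: it only counts queries.
final class FakeDatabase
{
    public static int $queries = 0;

    public static function findOrder(int $id): array
    {
        self::$queries++;
        return ['id' => $id];
    }
}

// Mirrors the article's Job: the query runs in the constructor,
// i.e. while the controller is still dispatching.
final class EagerJob
{
    private array $order;

    public function __construct(public int $orderId)
    {
        $this->order = FakeDatabase::findOrder($orderId);
    }

    public function handle(): void
    {
        // render the PDF from $this->order ...
    }
}

// Alternative: keep only the ID and query inside handle(),
// i.e. inside the queue worker.
final class LazyJob
{
    public function __construct(public int $orderId)
    {
    }

    public function handle(): void
    {
        $order = FakeDatabase::findOrder($this->orderId);
        // render the PDF from $order ...
    }
}

/** Simulates the controller loop; returns how many queries ran during dispatch. */
function dispatchAll(string $jobClass, array $ids, array &$queue): int
{
    $before = FakeDatabase::$queries;
    foreach ($ids as $id) {
        $queue[] = new $jobClass($id);
    }
    return FakeDatabase::$queries - $before;
}

$ids = range(1, 5000);
$eagerQueue = [];
$lazyQueue = [];

$eagerDispatchQueries = dispatchAll(EagerJob::class, $ids, $eagerQueue);
$lazyDispatchQueries = dispatchAll(LazyJob::class, $ids, $lazyQueue);

echo "EagerJob: $eagerDispatchQueries queries during dispatch\n";
echo "LazyJob:  $lazyDispatchQueries queries during dispatch\n";
```

With the lazy variant, the same number of queries still happens, but it moves from the request that dispatches the jobs into the workers.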

Now, the Jobs are in the queue, and we can monitor their performance with Laravel Horizon.

Look at the image above: our server handles only 32 jobs per minute on average.

And look at the Max Wait Time - it's 2 hours! That's not acceptable.
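A quick back-of-the-envelope check of why the wait gets that long: at a steady 32 jobs per minute, 5,000 jobs need roughly 156 minutes to drain. That is the same order of magnitude as the ~2 hour Max Wait Time Horizon reports. The `minutesToDrain()` helper is hypothetical and deliberately simplistic. It ignores ramp-up, retries, and jobs added while the queue drains.

```php
<?php

/**
 * Rough estimate: minutes needed to process a backlog at a steady rate.
 * Ignores ramp-up, retries and jobs added while draining.
 */
function minutesToDrain(int $jobs, float $jobsPerMinute): float
{
    if ($jobsPerMinute <= 0) {
        throw new InvalidArgumentException('Throughput must be positive.');
    }

    return $jobs / $jobsPerMinute;
}

$minutes = minutesToDrain(5000, 32);
printf("%.0f minutes (~%.1f hours)\n", $minutes, $minutes / 60);
```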

What can we do?

The first thing to try is to increase the number of Queue Workers.

3. Increase Queue Workers

The default number for Horizon is 10 workers. Let's bump it up to 50.

config/horizon.php

```php
// config/horizon.php
// ...
'environments' => [
    'production' => [
        'supervisor-1' => [
            'maxProcesses' => 50, // was 10 (Horizon's default)
            'balanceMaxShift' => 1,
            'balanceCooldown' => 3,
        ],
    ],

    'local' => [
        'supervisor-1' => [
            'maxProcesses' => 3,
        ],
    ],
],
// ...
```

Note: After this change, you must restart Horizon (for example, with `php artisan horizon:terminate`, letting your process monitor start it again).

Let's try again with 5,000 PDFs:

Well, it's better!

- Before: 32 jobs per minute and 2 hours wait

- After: 95 jobs per minute and 15 minutes wait

But it's still relatively slow. What else can we do?

Let's bump up the server hardware.

4. Upgrading Server Specs

All the benchmarks so far were run on this cheap Digital Ocean droplet with 1 vCPU and 1GB of RAM.

Let's upgrade it to a more powerful one, with 8x the RAM and 4 vCPUs:

Now, with 4 vCPUs and 8GB of RAM, let's run our benchmark for 5,000 PDFs again:

Great, 591 jobs per minute, 6x increase in performance!

But actually... we bumped up the RAM 8x and the vCPUs 4x, so I expected a much more significant gain. Is there anything else we can do?

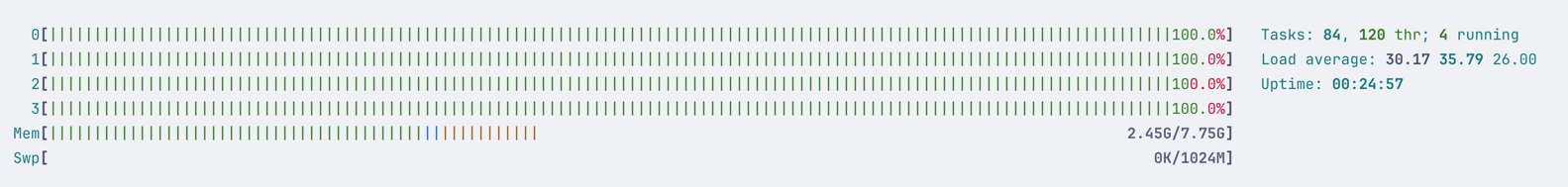

Let's look at the server load:

Yes, we upgraded the server, but we are loading it up to 100%.

Also, I tried clicking around the website while the jobs were running, and the pages were loading slowly. The queue worker processes are taking resources away from the web app on the same server. This is not good.
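One setting we did not try in this experiment is the `nice` option on a Horizon supervisor. As we understand it, this option lowers the CPU scheduling priority of the supervisor and its worker processes. When the web app and the workers compete for CPU, the web requests should then get served first. The value `10` below is an arbitrary example, and whether it would have helped here is untested.

```php
// config/horizon.php (fragment)
'environments' => [
    'production' => [
        'supervisor-1' => [
            'maxProcesses' => 50,
            'balanceMaxShift' => 1,
            'balanceCooldown' => 3,
            // Lower the workers' CPU priority relative to the web app.
            // 0 is Horizon's default; 10 is only an example value.
            'nice' => 10,
        ],
    ],
],
```

This trades job throughput for web app responsiveness. The queue still uses the spare CPU, but it yields first under contention.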

Let's try to experiment with queue workers a bit more.

5. CORRECT Amount of Queue Workers

In our last attempt, we increased the count to 50 workers, but that seemed to be too much for our server to handle: managing the workers became the bottleneck.

The issue is that our server needs to keep each worker active and feed it with jobs. It needs to:

- Maintain worker processes

- Keep the Queue running

- Get the data from Redis

- Get the data from the Database

Of course, this overhead only shows up when we have a massive number of jobs. During regular usage, Horizon would scale the workers down to save resources. But in our case, how do we find the correct number of workers? In our experience, it's trial and error.

Let's try to lower the number of workers to 30:

The result is immediately almost 2.5x better: 1,467 jobs per minute!

But let's look at the server load:

It's still 100%.

So let's try to lower it even more - to 10 workers (back to Horizon default):

Even better: 1,915 jobs per minute, and Max Wait Time is decreasing.

Also, I've re-tried clicking around the website in the background, and the main website is loading fine, so it is not blocked by the queue workers anymore. That was one of the main goals.

Of course, we still have almost 100% server load, but both the web app and the queue seem to be working well.
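The trial-and-error process above can be made a bit more systematic: try a few worker counts, record the throughput for each, and keep the best one. The sketch below is plain PHP with a hypothetical `pickWorkerCount()` helper. The measurement callback is a stub fed with the jobs-per-minute figures from our runs on the 4 vCPU / 8GB server. In reality, each value would come from a real Horizon run: change `maxProcesses`, restart Horizon, and read the throughput.

```php
<?php

/**
 * Hypothetical helper: try each candidate worker count and return the one
 * with the highest measured throughput (jobs per minute).
 *
 * @param int[]                $candidates
 * @param callable(int): float $measure returns jobs/minute for a worker count
 * @return array{workers: int, jobsPerMinute: float}
 */
function pickWorkerCount(array $candidates, callable $measure): array
{
    $best = null;

    foreach ($candidates as $workers) {
        $rate = $measure($workers);
        if ($best === null || $rate > $best['jobsPerMinute']) {
            $best = ['workers' => $workers, 'jobsPerMinute' => $rate];
        }
    }

    if ($best === null) {
        throw new InvalidArgumentException('No candidates given.');
    }

    return $best;
}

// Stub: workers => jobs/minute, as measured in this article on the upgraded server
$observed = [50 => 591.0, 30 => 1467.0, 10 => 1915.0];

$best = pickWorkerCount(array_keys($observed), fn (int $w): float => $observed[$w]);

printf("Best: %d workers (%.0f jobs/min)\n", $best['workers'], $best['jobsPerMinute']);
```

Remember that raw throughput is only one criterion. In our case, the web app's responsiveness under load mattered just as much.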

6. Conclusion (For Now)

This is the end of our experiment with queues and workers.

Conclusions:

- For bigger background jobs, a queue is a MUST

- Upgrading the server is likely the quickest way to solve queue performance problems (after the code itself is optimized)

- You may increase the number of Queue Workers, but beyond a certain point, managing the worker processes may become a bottleneck in itself

Our approach might have some flaws. For example:

- We are simply splitting big tasks into multiple smaller tasks. But the workload is still roughly the same.

- We don't have huge PDFs. Those may be more complex and make the issue bigger.

However, our goal with this experiment was to show you how this could be done, from simple foreach() loops to a more complex Queue system, and how we can optimize our tasks to run faster and more efficiently.

And as always, there might be other solutions to our problem. We can try:

- Moving Queue workers to a separate server: that way, we have a dedicated Queue server that can handle the load and prevent our main server from crashing.

- Buying even better hardware: We can find the sweet spot for our server specs and see how it handles the load.

- Optimizing our code to the max: We can try to find the bottlenecks in our code and optimize them. Would a different PDF library, for example, work better?

Could those be the topics for follow-up tutorials and "part 2" of this experiment? Please be active in the comments if you want this to happen.

I like your approach. Excited to see more.

I'm not 100% sure that I will answer correctly, so feel free to re-ask for more information!

Thanks for the reply!

I was kinda vague before. What I really wanna know: how does performance change when you scale worker servers by size and number?

Like:

Got any numbers or examples on this? Would help to see how it plays out.

My main takeaways gained from your blog post so far:

So, the more workers and servers you have - the faster it will be. That's kinda the nature of more stuff.

Imagine it like filling a pool. If you have 1 pump and 1 pipe, you will have just X amount of water going in. Now, if you add another pipe, the pressure will drop, but the amount of water will not change. Exactly the same happens with workers. Adding more workers lowers the pressure on each one (making it run smoother), but it doesn't change how much the server has to deal with. It can even make things worse.

Now, add 10 pumps and 10 pipes, and you have gained 10x performance for the same amount.

It's a basic explanation, but it covers 90% of how it works :)

As for the other suggestion for us to check, that might be part 2 of this experiment :) For now, we specifically focused on the "1 server, many workers" setup, to show how these decisions can change the performance and speed of the jobs :)

Ah, now the penny’s dropped for me too :)

So no matter how much I scale or add instances, jobs will still push the server to its limits, eating up CPU and RAM based on my config, right?

Can I throttle or adjust worker jobs so the server stays under x% CPU/RAM?

What’s the best way to run jobs safely without wrecking the server? Would love to hear how you’d handle this.

Not quite. The number of workers is fixed, and it is your maximum load. Let's say you have 5 workers: they will not take all the resources, but they will process the queue more slowly. That's pretty much it :)

Throttling - I am not sure, never had a need for it.

As for job run safety - just pick correct amount of workers or offload to separate server/servers.

We will see if part 2 comes - we might cover these concerns there in detail. Thanks for asking and feel free to add more questions!

Got it :)

Workers set the cap. Queue stacks up. Can slow things down. Gotta test it and see what works. Feels like art :)

Looking forward to part 2!

Great content. I would try to move the queue to a separate server. For this example, considering that it is a specific workload on a specific day of the month, it could be a spot instance to reduce costs.